Did you know that the number of transistors on a chip has doubled roughly every two years for about half a century? This trend, known as Moore’s Law, has been a driving force behind the rapid evolution of modern technology. In this article on the Tech4Ultra Electrical website, you’ll discover how this exponential growth transformed the semiconductor industry, reshaped integrated circuits, and continues to influence the future of everything from smartphones to supercomputers.

What is Moore’s Law?

Moore’s Law is the observation that the number of transistors on a microchip doubles approximately every two years, which has historically driven down the cost per transistor. The idea was first proposed in 1965 by Gordon Moore, who later co-founded Intel, and it became a guiding principle for innovation in the semiconductor industry. Although it was never a physical law, Moore’s prediction proved remarkably accurate for decades, driving an era of exponential growth in computing power.
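Written as a formula, the observation describes simple exponential growth. Here is a minimal sketch. The symbols are introduced only for illustration: N_0 is the transistor count in a reference year t_0, and T is the doubling period, which is about two years in the revised form of the law.

```latex
N(t) = N_0 \cdot 2^{(t - t_0)/T}, \qquad T \approx 2\ \text{years}

\frac{N(t+1)}{N(t)} = 2^{1/T} = 2^{1/2} \approx 1.41
```

In other words, a two-year doubling period corresponds to roughly 41% more transistors each year.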

At its core, Moore’s Law represents more than just a technical benchmark—it captures the speed and scale at which digital technology has evolved. From the early days of bulky mainframes to today’s ultra-thin smartphones, the shrinking size and increasing density of integrated circuits have enabled advancements in every sector: healthcare, education, finance, space exploration, and beyond.

Today, even as physical and economic limits challenge the traditional pace of transistor scaling, Moore’s Law remains highly relevant. It continues to influence research strategies, investment decisions, and the global race toward more powerful and energy-efficient computing. Understanding Moore’s Law helps us grasp not only how we got here, but also where technology may be heading next.

The Origin and Evolution of Moore’s Law

In 1965, Gordon Moore, then director of research and development at Fairchild Semiconductor and later a co-founder of Intel, published an article in *Electronics* magazine that would change the trajectory of technology. In it, he predicted that the number of components on an integrated circuit would double approximately every year. This observation wasn’t based on abstract theory. It was rooted in real industry trends Moore was witnessing at Fairchild. His bold claim eventually became known as Moore’s Law, and it set the stage for decades of exponential growth in computing performance.

By 1975, Moore revised his forecast to reflect a more sustainable pace of advancement. He adjusted the doubling rate to every two years, which better aligned with manufacturing capabilities and financial feasibility. Despite this change, the industry had already adopted Moore’s Law as a guiding benchmark, influencing both technical roadmaps and business strategies across the semiconductor landscape.
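The sketch below shows how much the 1975 revision mattered. It compares the growth factor implied by the original one-year doubling period with the revised two-year period over the same decade. The function name `growth_factor` is purely illustrative.

```python
def growth_factor(years: float, doubling_period: float) -> float:
    """Return how many times larger a quantity becomes after `years`
    if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    decade = 10
    # 1965 forecast: doubling every year
    print(f"1-year doubling over {decade} years: {growth_factor(decade, 1):.0f}x")
    # 1975 revision: doubling every two years
    print(f"2-year doubling over {decade} years: {growth_factor(decade, 2):.0f}x")
```

Over ten years, the original forecast implies a 1,024-fold increase, while the revised one implies a 32-fold increase.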

Early on, this doubling effect allowed for rapid innovation in consumer electronics. Computers became faster, smaller, and cheaper. What once filled entire rooms could now fit on a desk. The tech community embraced the law not just as a forecast, but as a challenge—an informal contract that pushed engineers to innovate aggressively. It spurred massive investments in research and development, and companies that couldn’t keep up were quickly left behind.

Moore’s Law evolved from a simple prediction into a self-fulfilling prophecy. It became the heartbeat of Silicon Valley and the standard by which progress was measured. Though not immutable, its influence on the digital age remains unmatched, shaping everything from smartphones to supercomputers.

Visualizing Growth: Data Trends and Graphs Over Decades

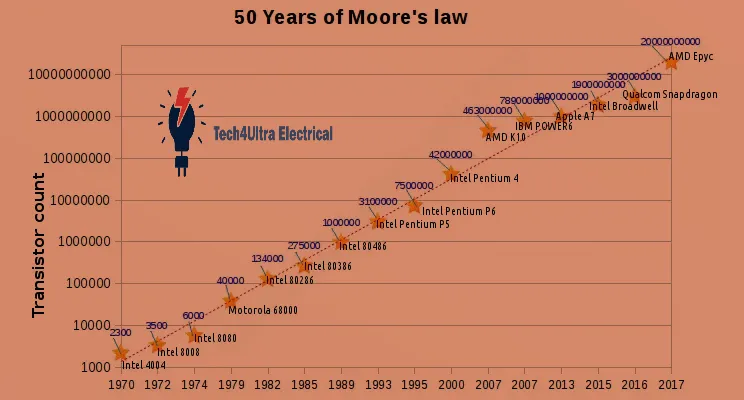

The story of Moore’s Law is best told through data. Since 1971, when Intel introduced the 4004 processor with just 2,300 transistors, the number of transistors on a single integrated circuit has grown into the billions. For example, Intel’s 12th Gen Core i9-12900K, released in 2021, is reported to contain more than 21 billion transistors. That is not linear growth but exponential growth: a roughly nine-million-fold increase in 50 years.
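The two data points in this paragraph are enough to estimate the doubling period they imply. This is a minimal sketch that takes the 4004’s 2,300 transistors in 1971 and the reported 21 billion for the i9-12900K in 2021 at face value. The helper name `implied_doubling_period` is hypothetical.

```python
import math


def implied_doubling_period(year0: float, count0: float,
                            year1: float, count1: float) -> float:
    """Years per doubling implied by growth from count0 to count1."""
    doublings = math.log2(count1 / count0)  # how many times the count doubled
    return (year1 - year0) / doublings


if __name__ == "__main__":
    period = implied_doubling_period(1971, 2_300, 2021, 21e9)
    print(f"Implied doubling period: {period:.2f} years")
```

The result is about 23 doublings in 50 years, or a little over two years per doubling. That is close to Moore’s revised forecast.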

If you plot this trend on a graph with a logarithmic vertical axis, you get a nearly straight line, which is the signature of consistent exponential growth. Roughly every two years, transistor density doubles, enabling dramatic leaps in performance and efficiency. From the Pentium chips of the 1990s to today’s AI-optimized processors, the pattern held remarkably steady for about five decades.
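The straight line appears because taking log2 of an exponential turns it into a linear function of time. The slope of that line is 1/T, measured in doublings per year. The sketch below fits the line by ordinary least squares and converts the slope back into a doubling period. It is illustrative only: the sample data are generated from an exact two-year doubling model rather than taken from real chips, and `fit_doubling_period` is a hypothetical name.

```python
import math


def fit_doubling_period(points: list[tuple[float, float]]) -> float:
    """Fit log2(count) = a + b * year by least squares; return 1 / b."""
    xs = [year for year, _ in points]
    ys = [math.log2(count) for _, count in points]  # log scale makes the trend linear
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Slope of the best-fit line, in doublings per year
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return 1 / slope


if __name__ == "__main__":
    # Synthetic series: 2,300 transistors in 1971, doubling exactly every 2 years
    data = [(year, 2_300 * 2 ** ((year - 1971) / 2)) for year in range(1971, 2022, 5)]
    print(f"Fitted doubling period: {fit_doubling_period(data):.2f} years")
```

With real transistor counts, the points scatter around the line, and the fitted slope summarizes the average pace.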

Such consistency didn’t happen by chance. It’s the result of relentless innovation in semiconductor manufacturing—advancements in photolithography, materials science, and chip architecture. Companies pushed the limits of physics, squeezing more power into smaller spaces, all while reducing costs.

Visualizing this growth highlights the transformative impact of Moore’s Law. It explains why your smartphone today is millions of times more powerful than the Apollo 11 computer that landed humans on the moon. The steady doubling of transistors is not just a technical feat—it’s the invisible engine behind modern life.

Major Technological Milestones That Enabled Moore’s Law

The sustained pace of Moore’s Law wouldn’t have been possible without a series of groundbreaking innovations in semiconductor technology. Each advancement helped engineers overcome new physical and technical challenges, allowing for continued transistor scaling.

1947 – Invention of the Transistor: At Bell Labs, John Bardeen and Walter Brattain, working in William Shockley’s group, built the first point-contact transistor. Transistors went on to replace bulky vacuum tubes and paved the way for compact integrated circuits.

1958 – Birth of the Integrated Circuit: Jack Kilby at Texas Instruments demonstrated the first integrated circuit in 1958, and Robert Noyce at Fairchild Semiconductor independently developed a practical silicon version in 1959. This innovation allowed multiple transistors to be fabricated on a single chip, igniting the modern electronics era.

1963 – Invention of CMOS Technology: Frank Wanlass at Fairchild Semiconductor introduced Complementary Metal-Oxide-Semiconductor (CMOS) logic. A CMOS gate draws almost no current except while switching, so it greatly reduced power consumption and heat. This allowed far more transistors to be packed onto a chip. CMOS became the industry standard in the 1980s and has been central to sustaining Moore’s Law ever since.

1970 – Introduction of DRAM: Dynamic Random-Access Memory revolutionized computer memory. Robert Dennard at IBM invented the one-transistor DRAM cell in 1966, and in 1970 Intel released the 1103, the first commercially available DRAM chip. The compact, easily scalable one-transistor cell supported the demand for higher-capacity, faster systems.

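1980s–Present – Photolithography Advancements: Photolithography uses light to transfer tiny circuit patterns onto silicon wafers, where they are then etched into the material. Successive generations of deep ultraviolet (DUV) and extreme ultraviolet (EUV) lithography made it possible to manufacture process nodes marketed as 7 nm, 5 nm, and 3 nm, keeping exponential growth on track. These node names are now largely commercial labels rather than literal feature sizes.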

These breakthroughs, spanning hardware, materials, and manufacturing, were driven by pioneers who continually pushed the boundaries of what chips could do. Thanks to their contributions, Moore’s Law evolved from an observation into the heartbeat of digital progress.

Moore’s Law and Its Societal Impact

The effects of Moore’s Law ripple far beyond the world of semiconductors. As transistors became smaller, faster, and cheaper, so did the devices that rely on them—changing the face of education, healthcare, entertainment, and artificial intelligence.

In education, microcontroller platforms like Arduino have made electronics and programming accessible to millions of students worldwide. Thanks to cheap integrated circuits, a complete development board now costs less than a meal—allowing hands-on learning in classrooms and homes alike.

Healthcare has also been transformed. Devices like portable ECG monitors, insulin pumps, and wearable heart-rate trackers are now compact and affordable because of high-density semiconductor chips. These tools have made preventive care more accessible, especially in remote or underserved regions.

In consumer electronics, smartphones represent the ultimate success story of exponential growth. With billions of transistors packed into a pocket-sized device, we can now access the internet, shoot high-resolution video, run GPS, and translate languages in real time—capabilities that were once science fiction.

Artificial intelligence, too, owes much to Moore’s Law. Modern GPUs and AI accelerators thrive on extreme transistor density, enabling real-time object recognition, speech processing, and natural language understanding at scale.

Ultimately, Moore’s Law didn’t just speed up computers—it empowered people, unlocked creativity, and reshaped the way we live and learn.

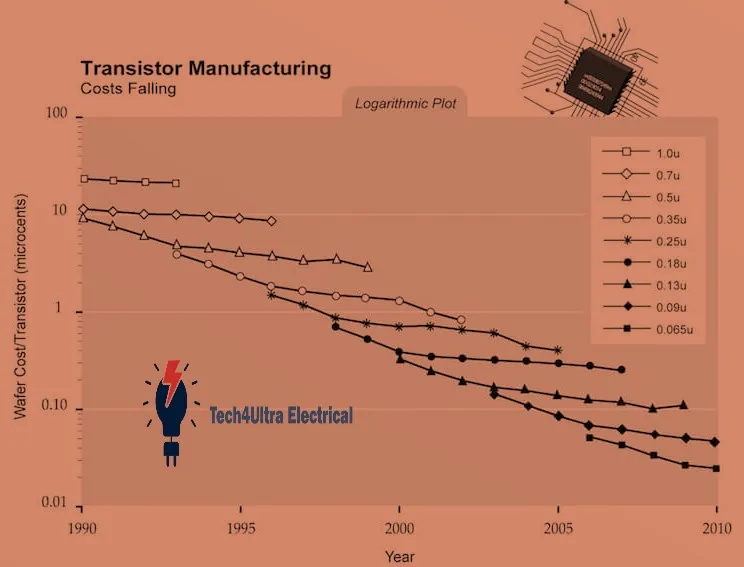

Economic Dimensions: Moore’s Second Law

While Moore’s Law is widely known for predicting growth in transistor density, it has a lesser-known counterpart, sometimes called Moore’s Second Law or Rock’s law. This principle highlights the steep rise in the cost of chip fabrication as semiconductors become more advanced. While performance increases exponentially, so does the capital needed to build the factories that deliver those gains.

Venture capitalist Arthur Rock, who helped fund Intel, warned early on that each generation of integrated circuit technology would require far more capital than the last. His prediction proved true. The cost of building a leading-edge semiconductor fabrication plant has surged from a few million dollars in the 1980s to over $20 billion today. For example, Taiwan Semiconductor Manufacturing Company (TSMC) plans to invest $40 billion in its Arizona plants alone.
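In its commonly cited form, Rock’s law (named after Arthur Rock) says that the cost of a leading-edge fab doubles roughly every four years. This minimal sketch projects that growth. It assumes the four-year doubling period and a purely hypothetical starting cost, which is not a historical figure. The name `projected_fab_cost` is illustrative.

```python
def projected_fab_cost(initial_cost: float, years: float,
                       doubling_period: float = 4.0) -> float:
    """Project fab cost, assuming it doubles every `doubling_period` years."""
    return initial_cost * 2 ** (years / doubling_period)


if __name__ == "__main__":
    start = 20e6  # hypothetical starting cost in US dollars, for illustration only
    for years in (0, 10, 20, 30, 40):
        cost = projected_fab_cost(start, years)
        print(f"after {years:2d} years: ${cost / 1e9:,.2f} billion")
```

Ten doublings over 40 years multiply the starting cost by 1,024. Under this model, even a modest initial figure reaches the tens of billions within a few decades.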

These escalating costs have reshaped research and development strategies. Only a few global players—like Intel, TSMC, and Samsung—can afford to stay at the forefront of exponential growth. Smaller firms often rely on legacy nodes or outsource chip production entirely.

This shift also raises questions about economic scalability. Governments are now stepping in with subsidies and strategic funding to support national semiconductor ecosystems, recognizing how vital these chips are for economic and technological sovereignty.

Moore’s Second Law reminds us that while technological progress seems fast and free, its underlying economics are increasingly complex and capital-intensive.

Is Moore’s Law Still Alive?

Few questions spark as much debate in the tech world as whether Moore’s Law is still alive. Some experts argue it’s nearing its end, while others believe it’s simply evolving. The doubling of transistors is no longer happening every two years with the same reliability, but innovation hasn’t stopped.

Several factors contribute to the slowdown. Physically, transistors are approaching atomic-scale limits, and some of their critical dimensions now measure only a few nanometers. At that size, quantum tunneling lets current leak through barriers meant to block it, and removing the resulting heat becomes a major challenge. Economically, the cost of new fabrication plants and advanced lithography tools has skyrocketed, as described by Moore’s Second Law.

Still, there’s evidence of continuation, just not in the traditional form. Techniques like chiplet design, 3D stacking, and advanced packaging allow manufacturers to improve performance without strictly following the original doubling rule. Apple’s M-series chips, for instance, achieve significant gains through architectural efficiency, not transistor count alone.

Moreover, innovations like EUV (extreme ultraviolet) lithography and gate-all-around (GAA) transistors are helping to push boundaries further. Companies like TSMC and Intel continue to invest billions in next-generation nodes.

In short, Moore’s Law may no longer be a law—it’s a target, a mindset, and a challenge. While the pace has changed, the ambition to make computing faster, cheaper, and more efficient lives on.

Beyond Moore’s Law: The Future of Chip Innovation

As traditional Moore’s Law scaling slows, the future of chip innovation is shifting toward new directions. The focus is no longer solely on packing more transistors onto a chip, but rather on rethinking architectures, optimizing power, and leveraging AI to drive performance gains.

One major trend is the rise of AI-specific chips, such as Google’s TPU or Apple’s Neural Engine. These processors are designed from the ground up to handle machine learning workloads efficiently. They rely on massive parallelism and dedicated matrix-multiplication hardware rather than general-purpose instruction processing. This shift highlights the growing demand for heterogeneous computing, in which CPUs, GPUs, and AI accelerators work together, each handling the tasks it suits best, to maximize efficiency.
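Much of the work in machine learning reduces to multiplying matrices, which is a long series of independent multiply-accumulate (MAC) operations. The plain-Python sketch below spells out that loop and counts the MACs. It illustrates the workload, not how a TPU or Neural Engine is actually programmed. The function name is hypothetical.

```python
def matmul_with_mac_count(a: list[list[float]], b: list[list[float]]):
    """Multiply matrices a (m x k) and b (k x n); return (product, mac_count)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    result = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-accumulate
                macs += 1
            result[i][j] = acc
    return result, macs


if __name__ == "__main__":
    product, macs = matmul_with_mac_count([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(product, macs)
```

Each of the m × n output entries needs k MACs, so the total is m × n × k. Every output entry can also be computed independently. An accelerator gains its advantage by performing thousands of these MACs in parallel in dedicated hardware.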

Energy efficiency is becoming just as important as raw power. In an age of mobile computing and large-scale data centers, reducing energy per computation is a top priority. Innovations in low-power semiconductors, dynamic voltage scaling, and smarter memory access patterns are essential for sustainability and scalability.
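The payoff from voltage scaling follows from the standard first-order model of CMOS switching power, P = α·C·V²·f. Here α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below ignores leakage power, and its parameter values are hypothetical.

```python
def dynamic_power(activity: float, capacitance: float,
                  voltage: float, frequency: float) -> float:
    """First-order CMOS switching power in watts: P = alpha * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


if __name__ == "__main__":
    # Hypothetical operating points for one chip (leakage ignored)
    full = dynamic_power(activity=0.2, capacitance=1e-9, voltage=1.0, frequency=3e9)
    # Lower both voltage and frequency by 20%, as DVFS might under light load
    scaled = dynamic_power(activity=0.2, capacitance=1e-9, voltage=0.8, frequency=2.4e9)
    print(f"full: {full:.2f} W, scaled: {scaled:.2f} W, ratio: {scaled / full:.3f}")
```

Voltage enters the formula squared. As a result, a 20% cut in both voltage and frequency roughly halves switching power (0.8³ ≈ 0.51) while giving up only 20% of the clock speed.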

Equally crucial is software-hardware synergy. Companies are now designing hardware and software together to squeeze out every ounce of performance. Apple’s success with its M-series chips is often credited to this tight integration, in which the operating system and applications are optimized for the chip’s architecture.

Looking ahead, collaborative innovation will define the next era. Universities, governments, and industry leaders are forming consortia to share research, reduce costs, and tackle common bottlenecks. Projects like the Open Compute Project and RISC-V initiatives aim to create open, modular ecosystems where innovation can flourish beyond the limits of traditional chip design.

In the post-Moore era, success depends not just on how small we can make transistors, but on how smartly we design the systems around them.

Reinventing the Digital Infrastructure

The digital world still relies, in many ways, on an architecture described in the 1940s: the von Neumann model, which separates memory from processing. This framework powered decades of growth. However, constantly moving data between memory and processor, known as the von Neumann bottleneck, increasingly limits speed and energy efficiency as traditional transistor scaling slows.

To move beyond these constraints, the industry is reimagining its core infrastructure. Quantum computing is one such approach. Instead of binary bits, it uses qubits, which can exist in superpositions of 0 and 1. Some problems, such as simulating molecules for drug discovery or factoring the large numbers that underpin parts of today’s cryptography, have quantum algorithms that promise speedups far beyond what classical computers can achieve. Although the technology is still in early development, companies like IBM and Google are racing to bring practical quantum machines to life.
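One way to see the exponential scaling is to count what a classical computer must store to simulate a quantum register exactly: a state vector with 2^n complex amplitudes for n qubits. The sketch assumes 16 bytes per amplitude (two 64-bit floats, as in a complex128 array). It illustrates classical simulation cost, not the speed of a quantum computer, and `state_vector_bytes` is an illustrative name.

```python
def state_vector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store the full state vector of `num_qubits` qubits."""
    return (2 ** num_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (10, 20, 30, 40, 50):
        print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

Each extra qubit doubles the memory. Thirty qubits already need 16 GiB, and 50 qubits need 16 PiB.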

Edge computing is another game-changer. Rather than relying on centralized cloud systems, processing power is distributed closer to where data is generated—like in autonomous cars, industrial sensors, or smart homes. This reduces latency and bandwidth demands, offering real-time insights powered by compact, low-energy semiconductors.
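Part of the latency gain is simple physics. A signal in optical fiber travels at roughly two-thirds the speed of light, about 2 × 10^8 m/s. The sketch below estimates only the propagation delay of a round trip and ignores routing, queuing, and processing time, which add more. The distances, vehicle speed, and function names are hypothetical.

```python
SIGNAL_SPEED_M_PER_S = 2e8  # approximate speed of light in optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Propagation delay, in milliseconds, to a server distance_km away and back."""
    return 2 * distance_km * 1_000 / SIGNAL_SPEED_M_PER_S * 1_000


def distance_travelled_m(speed_kmh: float, delay_ms: float) -> float:
    """How far a vehicle moves, in meters, while waiting delay_ms."""
    return speed_kmh / 3.6 * delay_ms / 1_000


if __name__ == "__main__":
    for label, km in (("edge node", 1), ("regional cloud", 1_000)):
        delay = round_trip_ms(km)
        moved_cm = distance_travelled_m(100, delay) * 100
        print(f"{label}: {delay:.2f} ms round trip, car at 100 km/h moves {moved_cm:.2f} cm")
```

Under these assumptions, a server 1,000 km away adds about 10 ms of unavoidable delay. A car at 100 km/h covers almost 28 cm in that time, while a nearby edge node adds almost nothing.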

Then there’s neuromorphic computing, which mimics how the human brain processes information. Using spiking neural networks and event-driven processing, neuromorphic chips aim to be ultra-efficient and ideal for AI workloads that require pattern recognition and sensory interpretation.
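The basic unit of many spiking neural networks is the leaky integrate-and-fire (LIF) neuron. Its membrane potential integrates incoming current, slowly leaks back toward rest, and emits a spike only when it crosses a threshold. The discrete-time sketch below uses a forward-Euler update with arbitrary, dimensionless parameter values. It illustrates the general model and does not reflect any specific neuromorphic chip’s design.

```python
def simulate_lif(input_current: list[float], dt: float = 1.0, tau: float = 20.0,
                 v_rest: float = 0.0, v_threshold: float = 1.0,
                 v_reset: float = 0.0, resistance: float = 1.0) -> list[float]:
    """Simulate one leaky integrate-and-fire neuron; return its spike times."""
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input
        v += (dt / tau) * (-(v - v_rest) + resistance * current)
        if v >= v_threshold:
            spike_times.append(step * dt)  # record the event
            v = v_reset                    # then reset the membrane potential
    return spike_times


if __name__ == "__main__":
    print("strong input:", simulate_lif([1.5] * 100))
    print("weak input:  ", simulate_lif([0.5] * 100))
```

The output is a sparse list of spike times rather than a value at every step. Input too weak to reach the threshold produces no spikes at all. Event-driven hardware gains its efficiency from doing work only when such spikes occur.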

Collectively, these innovations signal a shift in how we approach computing—not just in hardware, but in system design philosophy. As we rewire the digital foundation, we’re entering a new era where Moore’s Law is less about shrinking parts and more about rethinking possibilities.

Conclusion

Moore’s Law began as a bold prediction but quickly evolved into a driving force behind the digital revolution. For decades, the steady doubling of transistors fueled incredible progress in semiconductors, integrated circuits, and computing power, touching every aspect of modern life—from smartphones to space exploration.

Though the original pace of exponential growth may be slowing, the spirit of Moore’s Law lives on through architectural innovation, software optimization, and cross-industry collaboration. Whether through AI-centric design, energy-efficient chips, or quantum breakthroughs, the race to build faster, smarter, and more efficient systems continues.

As we move beyond transistor scaling, the legacy of Moore’s Law endures—not as a rule, but as a mindset that keeps pushing the boundaries of what technology can achieve.

FAQs

What is Moore’s law on exponential growth of technological advancement?

Moore’s Law states that the number of transistors on an integrated circuit doubles approximately every two years, leading to an exponential growth in computing power. This pattern has driven the rapid advancement of digital technologies since the 1960s.

How has Moore’s law impacted technology?

Moore’s Law enabled continuous improvements in performance, cost-efficiency, and size reduction across devices. From personal computers to smartphones and AI processors, the steady increase in transistor density transformed the semiconductor industry and global innovation.

What is the theory of exponential technological growth?

This theory suggests that technology progresses at an accelerating rate. In computing, it refers to the consistent, rapid increase in capabilities like processing speed, storage, and network bandwidth—largely driven by trends like Moore’s Law.

What is the law of exponential growth in computing?

In computing, this “law” describes how computing power grows exponentially over time, as reflected in the increased density of transistors in integrated circuits. Moore’s Law is the most cited example of this principle, demonstrating how performance scales rapidly while costs decline.
